By Deborah Barsky and Jan Ritch-Frel

Recent developments in the study of human prehistory hold clues about our times, our world, and ourselves.

We can all agree that most people want to know about their origins, from their family and ancestral history to, occasionally, the deeper evolutionary story.

Lately, this desire has become more palpable in society at large and has even taken on an urgent tone as we drift away from the lifestyle patterns and traditions that humans relied on for millions of years toward a technoculture that is highly addictive and hard to understand or break away from.

But the desire to know the deep past doesn’t translate so easily into understanding, especially since the information we encounter is necessarily filtered by our own sociohistorical context. One of the biggest obstacles to gaining a true understanding of the unfolding of humanity’s past is the way that modern societies foster a superficial understanding of the passage of time.

To delve deeply into human prehistory requires adopting a different kind of chronological stance than most of us are accustomed to: not just a longer span of time, but also a sense of evolution shaped by the operating rules of biology and its externalities, such as technology and culture. Exploring the past in this way lets us observe long-term evolutionary trends that remain pertinent in today’s world, and it shows that the novel technological behaviors our ancestors adopted and transformed into culture were not necessarily better, nor more sustainable over time.

Nature is indifferent to the recency of things: whatever promotes our survival is passed on and proliferated through future generations. This Darwinian axiom includes not only anatomical traits, but also cultural norms and technologies.

Shared culture and technologies give people an ongoing sense that their time is synchronized with everyone else’s. The museums and historical sites we visit, as well as the books and documentaries on the human story, overwhelmingly present the past to their audiences as simultaneous or synchronized stages, ranked on a kind of uniform scale of importance. Human events are charted along the direction of either progress or failure.

The archeological record shows us, however, that even though human evolution appears to have taken place as a series of sequential stages advancing our species toward “progress,” in fact, there is no inherent hierarchy to these processes of development.

This takes a while to sink in, especially if you’ve been educated within a cultural framework that explains prehistory as a linear and codependent set of chronological milestones, whose successive stages may be understood by historically elaborated logical systems of cause and effect. It takes an intellectual leap to reject such hierarchical constructions of prehistory and to perceive the past as a diachronous system of nonsynchronous events closely tied to ecological and biological phenomena.

But this endeavor is well worth the effort if it allows people to recognize and make use of the lessons that can be learned from the past.

If we can pinpoint the time, place, and circumstances under which specific technological or social behaviors were adopted by hominins and then follow their evolution through time, then we can more easily understand not only why they were selected in the first place, but also how they evolved and even what their links with the modern human condition may be.

Taking on this approach can help us understand how the reproductive success of our genus, Homo, eventually led up to the emergence of our own species, sapiens, through a complex process that caused some traits to disappear or be replaced, while others were transformed or perpetuated into defining human traits.

While exciting findings dating as far back as the Middle Paleolithic are becoming better known, the public is still typically presented with a compressed prehistory that starts at the end of the last ice age some 12,000 years ago. This is understandable, since the more recent archeological register consists of objects and buildings that are in many ways analogous to our own patterns of living. Ignoring the more distant phases of the shared human past, however, has the wider effect of converting our interpretations of prehistory into a sort of timeless mass, almost totally lacking in chronological and even geographical context.

Among recent breakthroughs reaching the public eye, it has been shown that H. sapiens emerged in Africa much earlier than previously thought, some 300,000 years ago. We now know that the first groups of anatomically modern humans arrived on the northern shores of the Mediterranean Sea as early as 200,000 years ago, a fact that implies a far longer cohabitation of our species in territories already occupied by other forms of Homo, such as the Neandertals and the Denisovans.

Genomic research is progressively telling us something about what our interactions with these species might have been like, proving not only that these encounters took place, but also that they sometimes involved interbreeding that produced reproductively viable offspring. Such knowledge about our distant past is making us keenly aware that we only very recently became the last surviving species of a very bushy human family tree.

Because of their great antiquity, these very ancient phases of the human evolutionary story are more difficult to interpret and involve hominins who were physically, cognitively, and behaviorally very different from ourselves.

For this reason, events postdating the onset of the Neolithic Period tend to be more readily shared in our society’s communication venues (e.g., museums and schools), while the older phases of human prehistory often remain shrouded in scientific journals, inaccessible to the general public.

But rendering prehistory without providing the complete picture of the evidence is like reading only the last chapter of a book. In this truncated vision, the vast majority of human development becomes a mere prelude before we move on to be amazed at how modern humans began to create monumental structures, sewage systems, and grain storage silos, for example. Just how we got there remains largely undisclosed to the public at large.

Bringing Prehistory Into the Open

The good news is that the rapid development of modern technologies is presently revolutionizing archeology and the ways that scientific data can be conveyed to society. This revolution is finally making ancient human prehistory understandable to a wider audience.

While many of the world’s prehistory museums still display only the most spectacular finds of classical or other “recent” forms of modern human archeology, we are finally beginning to see more exhibits dedicated to some of the older chapters of the human story. As awareness grows, the public is awakening to the meaning and significance of these chapters and gaining a better understanding of the global condition of humanity and its links with the past.

People are beginning to understand why the emergence of the first stone tool technologies some 3 million years ago in Africa was such a landmark innovation: it would eventually set our ancestors on an alternative evolutionary route that would sharply distinguish us from all other species on the planet.

By developing their stone tool technologies, early hominins provided the basis for what would eventually be recognized as culture: a transformative trait that turned us into the technology-dependent species we have become and that continues to shape our lives in unpredictable ways.

Archeologists interpret these first phases of the human technological adventure from the stone tools left behind by hominins very different from ourselves and from the contexts in which those tools are discovered. Among the authors of these groundbreaking ancient technologies are Homo habilis, the first species attributed to our genus (precisely because of its ability to intentionally modify stone into tools), but also other non-Homo hominins, such as Paranthropus and the australopithecines, with whom they shared the African landscape for many millennia.

Surprisingly, even at a very early stage beginning some 2,600,000 years ago in Africa, scientists have found that some hominins were systematizing stone toolmaking into a coherent cultural complex grouped under the denomination “Oldowan,” after the eponymous sites situated at Olduvai Gorge in Tanzania. This implies that stone toolmaking was being transformed at a very early date into an adaptive strategy, because it must have provided hominins with some advantages. From this time onward, our ancestors continued to produce and transmit culture with increasing intensity, a phenomenon that was eventually accompanied by demographic growth and expansions into new lands beyond Africa—as their nascent technologies transformed every aspect of their lives.

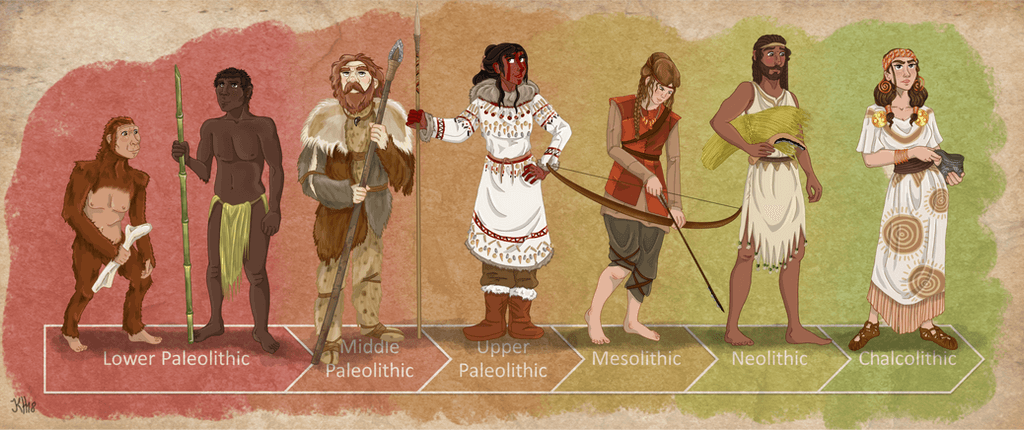

Unevenly through time and space, this hugely significant development branched out into the increasingly diverse manifestations of culture that came to characterize the successive hominin species composing the human family tree. Each technocomplex of the Lower Paleolithic, from the Oldowan to the subsequent Acheulian phase (beginning in Africa some 1,750,000 years ago and then spreading into Eurasia up to around 350,000 years ago), and onward into the Middle Paleolithic and beyond, is defined by specific sets of skills and accompanying behavioral shifts. The tools developed in service of those skills reveal to us the sociocultural practices of the hominins who used them.

Fossilized human remains, and the stone tool technologies these humans developed, provide the keys to understanding more about ourselves. We can comprehend the changes we observe in the archeological register through time thanks to the bodies of material evidence that tell the story of how humans evolved up to the present. This evidence gives us a frame of reference for recognizing the directions our species might be taking as we move into the future.

To see more clearly, we need to explore how this evolution took place, understanding its transformations diachronically, with change often occurring in nonlinear ways. To do so, we need to leave behind models of path dependence that condition our thinking and lead us to believe that features we recognize through our lens of modernity necessarily forced change in the next stages of technosocial development.

Human prehistory widens our conceptual lens by taking into consideration not only the innate human traits particular to each phase of hominin ancestral evolution, but also the external forces at play, such as the shifting climatic conditions that characterize the long time periods we are considering.

In much the same way as biological evolution, some technosocial innovations can emerge and persist, while others may remain latent in the human developmental repertory, providing a baseline for new creations that can be further developed. If proven to be favorable under specific conditions, selected behavioral capacities can be developed to the point of becoming defining aspects of the human condition.

The latent aspects of technology can, in different regions or time frames, be selected for, used, and refined, leading human groups to choose divergent evolutionary pathways and even triggering technological revolutions: when the changes lead to positive results, they can set off wider cultural developments in the populations that use them.

This way of thinking about technosocial evolution also helps to explain why specific cultural phases generally appear in some kind of coherent successive order through space and time, even though the transitions from one to the next (and the related social processes they engender) can appear blurry as we try to make sense of the archeological evidence.

In this context, it is essential to keep in mind that different hominins also evolved biologically through time, as toolmaking and its associated social implications affected the evolution of the brain. Developing stone tool technologies gave hominins an evolutionary edge, enabling them to carve out a unique niche in the scheme of things by improving their capacity to compete for resources with other kinds of animals. Technological and behavioral developments occurred and evolved in a nonlinear fashion because they were unevenly distributed according to each specific paleoecological and community setting.

When we look deeper into our prehistory, it is important to remember that the degree of complexity of human achievements was largely dependent upon particular stages of cognitive readiness. Human technosocial evolution thus appears to have global coherency through time because it reflects the successive phases of cognitive readiness attained on an anatomical level by distinct groups of hominins thriving in different paleoecological settings in diverse geographical regions.

While drawing straight lines between specific hominin species and particular kinds of tools presents some pitfalls, science has already demonstrated that cerebral development was (and is) tightly linked to technological evolution. Specific areas of the brain, namely the neocortical regions of the frontal and temporal lobes responsible for language, symbolic thought, volumetric planning, and other abstract cerebral functions, became closely bound up with toolmaking. Toolmaking, in turn, helped endow hominins with unique cerebral capacities, in particular the abilities to communicate complex abstract notions and to create multifaceted sociocultural environments.

Different types of symbolic behavior—the use of a system of symbols to communicate—were employed by different hominin species who found them to be positively adaptive. As a result, cerebral and technological evolution were linked into a co-evolutionary process by which early Homo and subsequent hominins developed idiosyncratic brain structures relative to other animals.

Following the Oldowan, the Acheulian cultural phase is commonly (but not exclusively) linked with the arrival of the successful and widely dispersed Homo erectus. It was during this era that humanity produced some of its most significant technological and behavioral breakthroughs, like fire making and the capacity to predetermine the shapes of the tools being made from stone. The archeological record attributed to the Acheulian bears witness to advanced technosocial standardization, with the advent of symmetrical tools like spheroids and handaxes attesting to the emergence of aesthetic sensitivity.

The expanding repertory of tool types that appeared at this time suggests that hominins were carrying out more diverse activities, while subtle differences observed in the ways of making and doing began to appear in specific regions, forming the foundation of land-linked traditions and social identities.

The fact that these breakthroughs occurred on comparable timescales in widely separate regions of the globe (South Africa, East Africa, the Middle East, and the Indian subcontinent) suggests that the hominins already living in these regions had reached a comparable stage of cognitive readiness, and that the specific conditions favoring the emergence of analogous latent technosocial capacities were ripe. The huge expanses separating these geographical hotbeds suggest that the Acheulian emerged without contact between populations.

The explanation that best fits the evidence is convergent development in the transition from a fairly simple form of Oldowan stone toolmaking to the more complex and sophisticated Acheulian: when Oldowan toolmakers spread out over the planet, they carried the seeds of the Acheulian with them in their minds, their culture, and the shapes of the stone tools they brought with them.

Indeed, it was only during the later phases of the Acheulian, when we observe signs of denser populations in Africa and Eurasia, that hominin groups would have developed the social networks necessary for technologies to spread from place to place through direct contact.

A similar process of latency and development is in fact observed even in more recent phases of the human evolutionary process—for example, with the emergence of such complex technosocial achievements as the intentional burial of congeners, the construction of monumental structures, the practices of agriculture and animal husbandry, or the invention of writing.

A diachronous approach to time allows us to draw more valuable insights from the 7 million years of evidence we have of human development. How we structure our understanding of that evidence can open up big opportunities for building a better future.

Deborah Barsky is a researcher at the Catalan Institute of Human Paleoecology and Social Evolution and associate professor at the Rovira i Virgili University in Tarragona, Spain, with the Open University of Catalonia (UOC). She is the author of Human Prehistory: Exploring the Past to Understand the Future (Cambridge University Press, 2022).

Jan Ritch-Frel is the executive director of the Independent Media Institute, and a co-founder of the Human Bridges project.

Source: Independent Media Institute

This article was produced by Human Bridges, a project of the Independent Media Institute.